Powering Microsoft Dynamics NAV 2013 on AWS 2

In the earlier article we explored active directory solutions and twotier architecture for Dynamics NAV 2013 on AWS. In this article we will be exploring High Availability SQL setup on EC2 for a production Dynamics NAV implementation.

SQL Server Cluster for Dynamics NAV

This was the trickiest part of the entire architecture. Here are the choices we went through:

RDS based SQL Server

We couldn’t have gotten any simpler than RDS as the default choice for MS SQL server, given our MySQL payloads are also performing very satisfactorily on RDS. However, there exists a key stumbling block which led us against RDS for Dynamics NAV. Dynamics NAV, for better or worse depends on db_owner permission on the master table of the SQL server instance. RDS however doesn’t let anyone tamper master table permissions, including super user. This is a big bummer scenario which led us to endofroad of our RDS considerations for Dynamics NAV .

EC2 SQL Server Enterprise AMI on EC2

This was a peculiar behaviour from Dynamics NAV. EC2 SQL Server AMI ships with an already installed SQL server instance. As it usually goes with SQL Server installations, it is named as ‘MSSQLSERVER’. It is said that in Computer Science, naming is one of the most difficult things to accomplish. Sadly, Dynamics NAV takes this quote to next level by making sure your setup doesn’t go through if your database is named after the default, MSSQLSERVER instance. This, kinda makes sense because Dynamics NAV doesn’t even want to touch the default SQL server installation with a 10foot stick. Sadly, this was also where the EC2 AMI fell short. We could have promptly proceeded with adding one more SQL Server instance in the same node and be done with our business. However, the AMI has no installation media bundled to add a SQL server instance and AWS thinks somehow this is a brilliant idea. If you are somehow very patient and are ready to go back and forth with AWS support, you can request Amazon to provide SQL server installation media. However, we are not convinced about licensing and audit implications of this route.

Using a vanilla Windows 2012 R2 AMI for SQL Server 2012

The only pain point of using a vanilla Windows 2012 R2 instance is taking care of the initial installation, which can be automated easily through chef. The advantage of going

ahead here however is manifold; you will not be billed for SQL license additionally and instead you pay only for what you use from AWS: the EC2 node. Of course, you will be getting an added advantage of having complete say over the SQL features you want to toggle and additional database instances that you want to install. Also, not being lockedin on a special EC2 node due to portability of SQL license is always a goodtohave feature.

MS SQL Cluster High Availability Setup on EC2

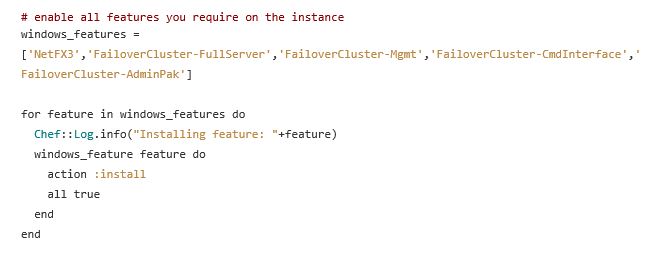

Eventually, we ended up with a 2 node SQL High Availability cluster with Manual Failover. Setting up a MasterReplica for SQL Server by hand has a lot of caveats and requires you to jump through hoops. We decided to go for a MasterSlave configuration here or in SQL Server terminology, a High Availability setup. Here is a short gist. While we haven’t figured out a way to automate entire HA Cluster setup, we did enable the failover clustering feature through a simple windows_feature block in the Chef recipe.

Few things to consider while going for SQL HA Cluster on AWS:

- Go for R family as you are going to need ample memory here along with decent CPU. These are what AWS suggests by default for their database families too.

- Make sure to setup SQL Agent jobs to backup transaction logs and full DB backups to S3.

- Be aware of CAL Licensing terminology where a customer is billed ‘percore’ unless you have a lot of spare money to burn. You will end up buying a CAL License + Core license for half the number of cores on your main server. This can be replicated on one more machine at max per Microsoft terminology. It is absolutely OK to spend some extra time to get the licensing verified before going in for the big purchase considering potential audits in future.

- Make sure to setup automatic failover with an Availability Group Listener. If you configure SQL Server for automatic failover, chances are that at an unwanted period your DB listener might failover and this can lead to needless consequences if you are not using 2 same capacity nodes.

- Be aware of security ports exposed for your SQL machines. We can’t stress this point enough. Don’t expose any port to public as there are enough bruteforce attacks from all over the internet on port 1433 or whatever SQL port you are using to make you sleep deprived just thinking about the exposure. Go with the thumb rule of opening only the bare minimum until something breaks.

Common Issues of SQL Server with Dynamics NAV:

- NAV Desktop/Web client refuses to start due to incorrect privileges on the database. The Active Directory user powering SQL server service needs to have db_owner permission on the master database along with application database.

- Make sure SQL Server Browser service is running for NAV to be able to talk to SQL server.

- Make sure firewall is configured correctly in Windows machine. Since we can restrict ports directly in AWS security group, you may want to keep Windows firewall to bare minimum to avoid double configuration headaches.

Setting up a read replica: Once HA Cluster is setup for SQL, read only access to the secondary DB can be configured easily so that your analytics/reporting teams can work with the live data without slowing down your primary database.

Monitoring

Because a bulk of our systems are Linux based and even our monitoring hosts are Linux based, we wanted to go for a monitoring system that reports to a Linux node.

Sensu + Uchiwa for Monitoring

First order of business was to monitor NAV and SQL services on Sensu. We went a step ahead and integrated alerts to PagerDuty so that DevOps team gets alerted via call/SMS whenever either of these services are down as this can mean delay in the supply/chain if not mitigated quickly.

Secondly, we implemented a plugin on top of Uchiwa for restarting services on the fly without having to do the whole RDP dance routine. While implementing this, we went for a simple

client/server mechanism to remotely trigger a service restart. This could be better handled by WinRM.

Deployments

Deployments that don’t involve schema level changes are automated. Developers have to upload their changes in the form of a .fob binary on a S3 bucket. A SNS notification that is configured for upload events pings a Flask based server running on Docker. This talks to a secondary NAV server via winexe utility and performs download and deploy of the .fob file on the secondary NAV server.

What’s Next?

Offloading NAV job queues

For better performance, we use an additional NAV node which has the sole role of running all the NAV job queues. This is done to minimise load on the main application server. Make sure you don’t install Dynamics NAV web client on this server as you wouldn’t want the same data to be overwritten from multiple places.

WinRM Migration

Instead of running hooks on top of Ruby/Python which run natively on Windows, we are trying to explore native SOAP based WinRM API, which is bundled from Windows 2012 onwards and enabled in EC2 nodes by default. This will open a lot of new avenues for further automation in monitoring, deploy and setup. Currently, the Python Library pywinrm makes it a lot easier to interact with WinRM.

Version Controlled Deployments

As of now, we are uploading a binary to S3 which gets automatically deployed on NAV. A big caveat of this approach is that features and bugfixes are at the whims of developers who are often from third party vendors. Hence business is often opaque about changes that are going live. NAV supports .txt deploys where the .txt file needs to be built and compiled. Once version controlled deploys go live, we can trigger a deploy directly from Bitbucket commit by doing repository polling or pipelines.

This article has been authored by Ganesh Hegde, a DevOps engineer at Urban Ladder. While not fiddling with code and systems Ganesh loves to pretend he is on a diet. Ganesh has a penchant for dark humour.